Flink processing keyed streams with or without windowing

Note: This article is based on an answer from Poe.

In Apache Flink, processing keyed streams can be done with or without windowing, and each approach serves different use cases. Here’s a comparison of the two:

Processing Keyed Streams Without Windowing

Characteristics:

- Continuous Processing: Data is processed as it arrives, without grouping into windows.

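- Stateful Operations: After keyBy(), you can maintain per-key state across events, either implicitly with operations like reduce() or explicitly with keyed state (for example ValueState) inside a rich function or a KeyedProcessFunction.

- Low Latency: Each event is handled as soon as it arrives, so results are not delayed until a window closes. Event-time logic is still available through timers in a KeyedProcessFunction.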

- Use Cases: Suitable for applications that need to respond immediately to each event, such as real-time analytics, alerting systems, or monitoring applications.

Example:

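import org.apache.flink.streaming.api.datastream.DataStream;
import org.apache.flink.streaming.api.functions.KeyedProcessFunction;
import org.apache.flink.util.Collector;

DataStream<Event> stream = // your source stream

DataStream<Result> results = stream
    .keyBy(event -> event.getKey())
    // On a keyed stream, KeyedProcessFunction (not plain ProcessFunction) gives access
    // to keyed state and timers; the String key type assumes getKey() returns a String.
    .process(new KeyedProcessFunction<String, Event, Result>() {
        @Override
        public void processElement(Event value, Context ctx, Collector<Result> out) throws Exception {
            // Processing logic here; called once for every incoming event
        }
    });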
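
To make the "one output per event" behaviour concrete, here is a minimal plain-Java model of what a keyed, non-windowed operator does. It is a sketch of the semantics only, not Flink API: the class name RunningSumModel, the sample events, and the HashMap standing in for keyed state are all assumptions made for illustration. In a real job the per-key total would live in Flink-managed keyed state instead.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Plain-Java model of a keyed, non-windowed running sum (illustrative, not Flink API). */
final class RunningSumModel {
    // Stand-in for keyed state: one running total per key.
    private final Map<String, Long> sumPerKey = new HashMap<>();

    /** Handles one event and immediately returns the updated total for its key. */
    long processElement(String key, long amount) {
        long updated = sumPerKey.getOrDefault(key, 0L) + amount;
        sumPerKey.put(key, updated);
        return updated; // one output per input event, no waiting for a window to close
    }

    /** Feeds a small hypothetical stream through the model and collects every output. */
    static List<String> demo() {
        RunningSumModel model = new RunningSumModel();
        String[] keys = {"a", "b", "a", "a", "b"};
        long[] amounts = {5, 1, 2, 3, 10};
        List<String> out = new ArrayList<>();
        for (int i = 0; i < keys.length; i++) {
            out.add(keys[i] + "=" + model.processElement(keys[i], amounts[i]));
        }
        return out;
    }

    public static void main(String[] args) {
        System.out.println(demo()); // [a=5, b=1, a=7, a=10, b=11]
    }
}
```

Five events go in and five results come out, each reflecting everything seen so far for that key. The state also never expires in this model, which is worth keeping in mind: without windows, cleaning up old per-key state is your responsibility.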

Processing Keyed Streams With Windowing

Characteristics:

- Bounded Grouping: Events are grouped into finite windows based on time or count, and each window is evaluated as a unit (much like a small batch) rather than event by event.

- Aggregation: Commonly used for calculating aggregates over a specific time frame (e.g., sum, average).

- Window Types: Flink supports different window types, such as tumbling, sliding, and session windows.

- Event-Time and Processing-Time: You can specify whether to use event-time or processing-time semantics for windowing.

- Use Cases: Ideal for scenarios where you want to analyze data over intervals, such as calculating metrics per minute, hour, or day.
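
The difference between tumbling and sliding windows comes down to simple arithmetic on the event timestamp. The sketch below is a plain-Java model, not Flink API; the class and method names are hypothetical, and it assumes non-negative millisecond timestamps and a window offset of zero. Session windows are left out because they depend on the gaps between events rather than on fixed boundaries.

```java
import java.util.ArrayList;
import java.util.List;

/** Plain-Java model of time-window assignment (illustrative, not Flink API). */
final class WindowAssignmentModel {

    /** Start of the single tumbling window [start, start + size) containing the timestamp. */
    static long tumblingStart(long timestampMs, long sizeMs) {
        return timestampMs - (timestampMs % sizeMs);
    }

    /** Starts of all sliding windows [start, start + size) that contain the timestamp. */
    static List<Long> slidingStarts(long timestampMs, long sizeMs, long slideMs) {
        List<Long> starts = new ArrayList<>();
        // Latest window start that is aligned to the slide and not after the timestamp.
        long lastStart = timestampMs - (timestampMs % slideMs);
        // Walk backwards one slide at a time while the window still covers the timestamp.
        for (long start = lastStart; start > timestampMs - sizeMs; start -= slideMs) {
            starts.add(start);
        }
        return starts;
    }

    public static void main(String[] args) {
        long ts = 125_000L; // an event 125 seconds after the epoch
        System.out.println(tumblingStart(ts, 60_000L));          // 120000
        System.out.println(slidingStarts(ts, 60_000L, 30_000L)); // [120000, 90000]
    }
}
```

With a 1-minute tumbling window the event lands in exactly one window. With a 1-minute window sliding every 30 seconds it lands in two, which is why sliding windows do proportionally more work per event.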

Example:

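DataStream<Event> stream = // your source stream

// Event-time windows require timestamps and watermarks to be assigned upstream.
DataStream<Result> results = stream
    .keyBy(event -> event.getKey())
    // or any other window type; recent Flink releases accept java.time.Duration here instead of Time
    .window(TumblingEventTimeWindows.of(Time.minutes(1)))
    // SumAggregator is a placeholder for your own AggregateFunction implementation
    .aggregate(new SumAggregator());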
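
For contrast with per-event processing, the following plain-Java model shows roughly what a keyed tumbling event-time sum does: it accumulates per key and per window, and emits nothing until the watermark passes the end of a window. This is a sketch under assumptions, not Flink API. The class TumblingSumModel and its methods are hypothetical, timestamps are non-negative milliseconds, and allowed lateness is taken to be zero, so events for an already fired window are dropped.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/** Plain-Java model of a keyed tumbling event-time sum (illustrative, not Flink API). */
final class TumblingSumModel {
    private final long sizeMs;
    private long watermarkMs = Long.MIN_VALUE;
    // windowStart -> (key -> sum); TreeMap keeps windows ordered by start time.
    private final TreeMap<Long, TreeMap<String, Long>> open = new TreeMap<>();

    TumblingSumModel(long sizeMs) {
        this.sizeMs = sizeMs;
    }

    /** Adds an event to its window, or drops it if that window has already fired. */
    void add(String key, long timestampMs, long amount) {
        long start = timestampMs - (timestampMs % sizeMs);
        if (start + sizeMs - 1 <= watermarkMs) {
            return; // late event: its window is already closed
        }
        open.computeIfAbsent(start, s -> new TreeMap<>()).merge(key, amount, Long::sum);
    }

    /** Fires every window whose last timestamp (end - 1) is at or before the watermark. */
    List<String> advanceWatermark(long newWatermarkMs) {
        watermarkMs = Math.max(watermarkMs, newWatermarkMs); // watermarks never go backwards
        List<String> fired = new ArrayList<>();
        while (!open.isEmpty() && open.firstKey() + sizeMs - 1 <= watermarkMs) {
            Map.Entry<Long, TreeMap<String, Long>> window = open.pollFirstEntry();
            long start = window.getKey();
            for (Map.Entry<String, Long> e : window.getValue().entrySet()) {
                fired.add("[" + start + "," + (start + sizeMs) + ") " + e.getKey() + "=" + e.getValue());
            }
        }
        return fired;
    }

    public static void main(String[] args) {
        TumblingSumModel model = new TumblingSumModel(60_000L);
        model.add("a", 10_000L, 5);
        model.add("b", 20_000L, 1);
        model.add("a", 50_000L, 2);
        model.add("a", 70_000L, 3);
        System.out.println(model.advanceWatermark(30_000L));  // []
        System.out.println(model.advanceWatermark(59_999L));  // [[0,60000) a=7, [0,60000) b=1]
        model.add("a", 15_000L, 100);                         // late, dropped
        System.out.println(model.advanceWatermark(120_000L)); // [[60000,120000) a=3]
    }
}
```

Four events produce only three results, and none of them appear until the watermark says the window is complete. That delay is the price of getting one consolidated answer per key and interval.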

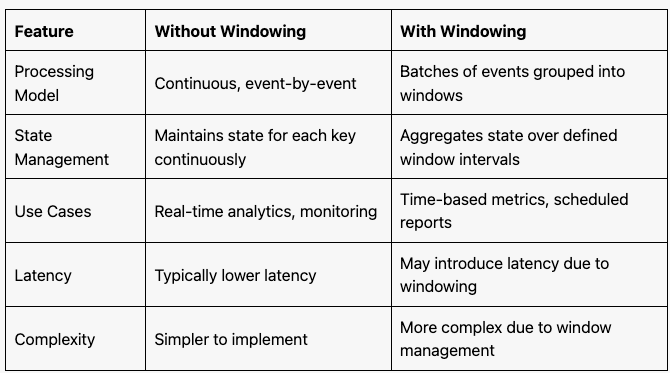

Summary of Differences

Conclusion

- Without Windowing: Use for real-time, low-latency applications that need immediate responses to each event.

- With Windowing: Use for scenarios requiring aggregation or computation over a fixed period, like summarizing data trends over time.